- cross-posted to:
  - [email protected]
  - [email protected]

The narrative that OpenAI, Microsoft, and freshly minted White House “AI czar” David Sacks are now pushing to explain why DeepSeek was able to create a large language model that outpaces OpenAI’s while spending orders of magnitude less money and using older chips is that DeepSeek used OpenAI’s data unfairly and without compensation. Sound familiar?

Both Bloomberg and the Financial Times are reporting that Microsoft and OpenAI have been probing whether DeepSeek improperly trained its R1 model, which is taking the AI world by storm, on the outputs of OpenAI models.

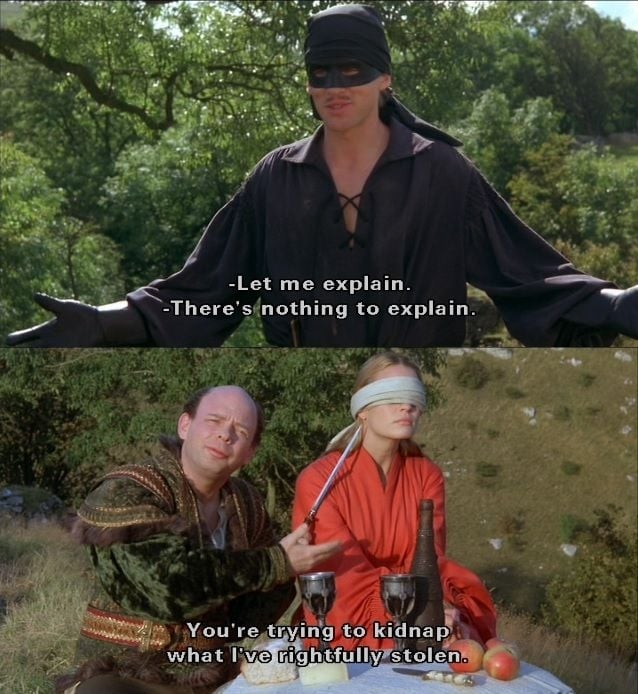

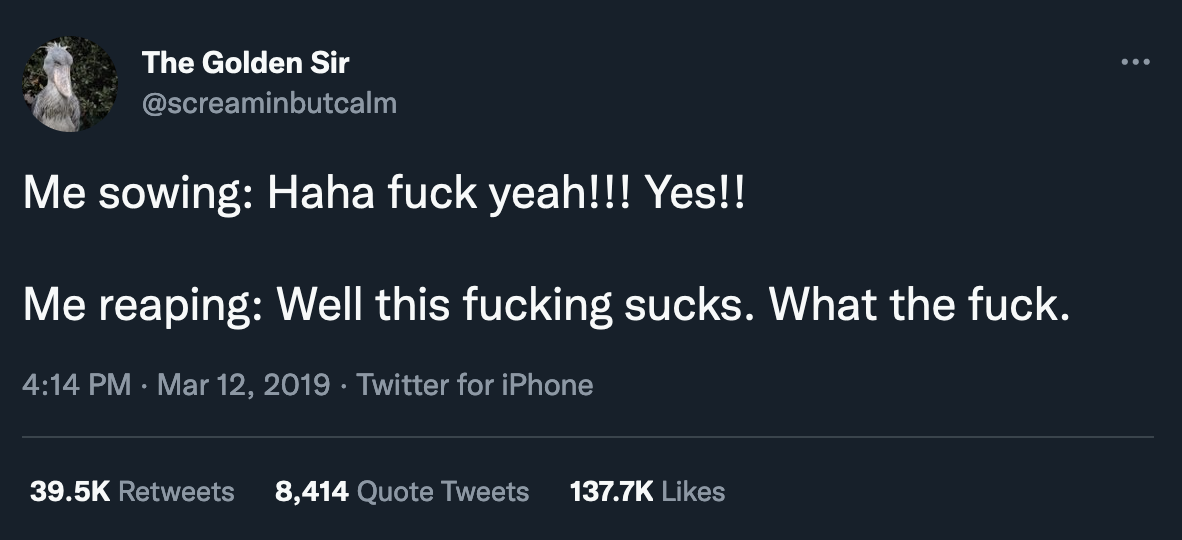

It is, as many have already pointed out, incredibly ironic that OpenAI, a company that has been obtaining large amounts of data from all of humankind largely in an “unauthorized manner,” and, in some cases, in violation of the terms of service of those from whom it has been taking, is now complaining about the very practices by which it has built its company.

OpenAI is currently being sued by the New York Times for training on its articles, and its argument is that this is perfectly fine under copyright law’s fair use protections.

“Training AI models using publicly available internet materials is fair use, as supported by long-standing and widely accepted precedents. We view this principle as fair to creators, necessary for innovators, and critical for US competitiveness,” OpenAI wrote in a blog post. In its motion to dismiss in court, OpenAI wrote “it has long been clear that the non-consumptive use of copyrighted material (like large language model training) is protected by fair use.”

If OpenAI argues that it is legal for the company to train on whatever it wants for whatever reason it wants, then it stands to reason that it doesn’t have much of a leg to stand on when competitors use strategies common in the world of machine learning to make their own models.

It is effing hilarious. First, OpenAI & friends steal creative works to “train” their LLMs. Then they are insanely hyped for what amounts to glorified statistics, and get “valued” at insane amounts while burning money faster than a Californian forest fire. Then a competitor appears that has the same evil energy but slightly better statistics… bam. A trillion dollars of “value” just evaporates as if it never existed.

And then suddenly people are complaining that DeepSuck is “not privacy friendly” and stealing from OpenAI. Hahaha. Fuck this timeline.

It never did exist. This is the problem with the stock market.

I hear tulip bulbs are a good investment…

How much for two thousands?

Tree fiddy 🦕

Nah bitcoin is the future

Edit: /s I was trying to say bitcoin = tulips

Capitalism basics, competition of exploitation

You know what else isn’t privacy friendly? Like all of social media.

You can also just run DeepSeek locally if you are really concerned about privacy. I did it on my 4070 Ti with the 14B distillation last night. There’s a Reddit thread floating around that describes how to do it with Ollama and a chatbot program.
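For anyone wanting to try the local setup described above, here is a minimal sketch of sending a prompt to a locally running model through Ollama’s HTTP API, using only the Python standard library. It assumes Ollama is installed and serving on its default port (11434) and that a DeepSeek-R1 distillation has been pulled under the tag `deepseek-r1:14b`; the helper names `build_request` and `ask_local` are hypothetical, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumption: the 14B distillation was pulled with `ollama pull deepseek-r1:14b`.
MODEL = "deepseek-r1:14b"


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = MODEL) -> str:
    """Send the prompt to the local server and return the generated text.

    The request only goes to localhost, so the prompt is not sent to
    DeepSeek's hosted service.
    """
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask_local("Explain fair use in one sentence.")` returns the model’s reply as a string. Setting `"stream": False` keeps the sketch simple by getting one JSON object back instead of a stream of partial chunks.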

That is true, and running locally is better in that respect. My point was more that privacy was hardly ever an issue until suddenly now.

Absolutely! I was just expanding on what you said for others who come across the thread :)

Wasn’t zuck the cuck saying “privacy is dead” a few years ago 🙄

I’m an AI/comp-sci novice, so forgive me if this is a dumb question, but does running the program locally allow you to better control the information that it trains on? I’m a college chemistry instructor who has to write lots of curricula, assignments and lab protocols; if I ran DeepSeek locally and fed it all my chemistry textbooks and previous syllabi and assignments, would I get better results when asking it to write a lab procedure? And could I then train it to cite specific sources when it does so?

I’m not all that knowledgeable either, lol. It is my understanding, though, that what you download, the “model,” is the result of their training. You would need some other way to train it, and I’m not sure how you would go about doing that. The model is essentially the “product” that is created from the training.

> but does running the program locally allow you to better control the information that it trains on?

in a sense: if you don’t let it connect to the internet, it won’t be able to take your data to the creators

It just gets better and better y’all.

https://www.theregister.com/2025/01/30/deepseek_database_left_open/

@whostosay I know they’re being touted as having done very much with very little, but this kind of thing should have been part of the little.

I’m not understanding your reply, do you mind rephrasing?

I feel like I didn’t appreciate this movie enough when I first watched it but it only gets better as I get older

“Now” is always a good time to rewatch it & get more out of it!

It’s a true comedy that still holds up. I honestly thought for years that Mel Brooks had something to do with it, but he didn’t. It’s so well crafted that there are many layers to it that you can’t even grasp when watching as a child. Seeing it as an adult just opens your eyes to how amazingly well done it was.

I could do without the whole Billy Crystalizing of large portions of it though.

I always thought Rob Reiner had a similar sense of humor to Mel Brooks. And I liked Billy Crystal in it, it kept that section of the movie from feeling too heavy, though I get it’s not everyone’s thing.

For anyone who hasn’t read it, the book is fantastic as well, and helped me appreciate the movie even more (it’s probably one of the best film adaptations of a book ever, IMO). The humor and wit of William Goldman was captured expertly in the movie.

I didn’t realize it was a book. Guess I’ll be searching that out.

Rob Reiner’s dad Carl was best friends with Mel Brooks for almost all of Carl’s adult life.

https://www.vanityfair.com/hollywood/2020/06/carl-reiner-mel-brooks-friendship

👏👏👏👏👏

Tamaleeeeeeeeesssssss

hot hot hot hot tamaleeeeeeeees

If these guys thought they could out-bootleg the fucking Chinese then I have an unlicensed t-shirt of Nicky Mouse with their name on it.

The thing is, the Chinese did not just bootleg… they took what was out there and made it better.

Their shit is now likely objectively “better” (TBD tho, we need some time)… American parasites in shambles asking Daddy Sam to intervene after they already blocked Nvidia GPUs and shit.

Still got cucked and now crying about it to the world. Pathetic.

They also already rolled back Biden admin’s order for AI protections. So they don’t even have the benefit of those. There’s supposedly a Trump admin AI order now in place but it doesn’t have the same scope at all. So Altman and pals may just be SOL. There’s no regulatory body to tell except the courts and China literally doesn’t care about those.

DeepSeek’s specific trained model is immaterial—they could take it down tomorrow and never provide access again, and the damage to OpenAI’s business would already be done.

DeepSeek’s model is just a proof-of-concept—the point is that any organization with a few million dollars and some (hopefully less-problematical) training data can now make their own model competitive with OpenAI’s.

DeepSeek can’t take down the model, it’s already been published and is mostly open source. Open source LLMs are the way, fuck closedAI

Right—by “take it down” I just meant take down online access to their own running instance of it.

I suspect that most usage of the model is going to be companies and individuals running their own instance of it. They have some smaller distilled models based on Llama and Qwen that can run on consumer-grade hardware.

Imagine if a little bit of the millions that so many companies are willing to throw at the shit AI bubble was actually directed to anything useful.

… assuming deepseek is telling the truth, something they have plenty of incentives to lie about


Corporate media take note. This is how you do reality-based reporting. None of the both-sides bullshit trying to justify or make excuses, just laughing in the face of absurd hypocrisy. This is a well-respected journalist confronting a truth we can all plainly see. See? The truth doesn’t need to be boring or bland or “balanced” by disingenuous attempts to see the other side.

> I will explain what this means in a moment, but first: Hahahahahahahahahahahahahahahaha hahahhahahahahahahahahahahaha. It is, as many have already pointed out, incredibly ironic that OpenAI, a company that has been obtaining large amounts of data from all of humankind largely in an “unauthorized manner,” and, in some cases, in violation of the terms of service of those from whom they have been taking from, is now complaining about the very practices by which it has built its company.

Good that 404 are unafraid of tackling issues, but tbh I find the “hahaha” unprofessional and dislike the informal tone in news.

I definitely understand that reaction. It does give off a whiff of unprofessionalism, but their reporting is so consistently solid that I’m willing to give them the space to be a little more human than other journalists. If it ever got in the way of their actual journalism I’d say they should quit it, but that hasn’t happened so far.

It’s just… so deserved, you know? Sometimes you can’t help but laugh in the face of such karma and fucking irony.

I love how die hard free market defenders turn into fuming protectionists the second their hegemony is threatened.

Tale as old as capitalism.

the Chinese realised OpenAI forgot to open source their model and methodology so they just open sourced it for them 😂

Intellectual property theft for me but not for thee!

Regardless of how OpenAI procured their data, I’m absolutely shocked that a company from China would obtain data unauthorized from a company in another country.

It’s a shame that you can’t copyright the output of AI, isn’t it?

Trump executive order on the copyrightability of AI output in 3…

so? it won’t have any effect on china, because last i checked, us laws apply only in the us

No honor among thieves.

There’s plenty of honor in Deepseek releasing open source.

> explain why DeepSeek was able to create

Surely they also received tons of plutonium donations from Iran!

/s