‘There is no such thing as a real picture,’ says Samsung exec.

Samsung’s head of product is now saying that every photo is fake. Samsung’s new Galaxy S24 phones increase the ways that the company uses AI to produce pictures.

So if every photo from a Galaxy S24 is a fake AI composition, does that mean none of them could be copyrighted or used as evidence?

Those will be fun days in court!

There was a photo recently from an iPhone that composited several shots of a bridal dress in a mirror, and the reflections didn’t match reality. Apparently Samsung has been ‘enhancing’ photos of the moon. So your statement isn’t only correct, it’s already reality for many manufacturers.

Whatever AI bullshit is going on with my camera, it needs to stop.

I got a pic of the license plate of someone who hit my car and took off.

It should have been mildly blurry, but accurate. If it had been, I could have used a deblur / high-pass filter and some DSP to make a reasonable guess, especially across three pics. E.g., an “A” should have a triangular shape and be wider at the bottom, while a “W” would be wider at the top.

But in each picture, the license plate had differently shaped squiggles. It’s like I took a picture of three different plates, blurred each one, then painted over it.

Very strange.
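The multi-frame deblurring idea above can be sketched roughly like this. It is a minimal illustration under assumptions, not what any phone or forensic tool actually does: it assumes the three shots are already aligned, the same size, and grayscale with values 0..255, and all function names are hypothetical. Averaging the frames reduces random sensor noise, and an unsharp mask (adding back a scaled high-pass component, `img - blur(img)`) boosts edges such as the strokes of an “A” or “W”.

```python
from typing import List

Image = List[List[float]]


def average_frames(frames: List[Image]) -> Image:
    """Average several already-aligned grayscale frames pixel by pixel.

    Independent noise shrinks roughly with sqrt(N); detail that no frame
    captured faithfully cannot be recovered this way.
    """
    h, w = len(frames[0]), len(frames[0][0])
    n = len(frames)
    return [[sum(f[y][x] for f in frames) / n for x in range(w)] for y in range(h)]


def box_blur(img: Image) -> Image:
    """3x3 box blur; out-of-range neighbors are clamped to the nearest edge pixel."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def unsharp_mask(img: Image, amount: float = 1.0) -> Image:
    """Boost the high-pass part: out = img + amount * (img - blur(img)), clamped to 0..255."""
    blurred = box_blur(img)
    return [
        [min(255.0, max(0.0, p + amount * (p - b))) for p, b in zip(row, brow)]
        for row, brow in zip(img, blurred)
    ]
```

This only helps if every frame is a noisy but faithful copy of the same plate. If each frame has had different detail painted in, averaging just blends three inventions together.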

And your photos can’t be used as evidence.

Samsung photos probably shouldn’t be. What if you do a heavy zoom on a person far away and their AI just totally invents a new face for them?

It already invents different blurred strokes over license plate letters from afar.

I think this is a great example of why a lot of anti-AI sentiment is really just about ChatGPT and Midjourney (and their direct competitors), not AI in general.

We should all oppose any regulation that would be so broad as to include this, or so off-target as to ban it but still allow the auto-HDR or noise reduction filters that have been ubiquitous for a decade or so. Photo preprocessing is nothing new, and there are multiple layers of it that you can’t disable on most phones.

Edit: Unless of course this was trained on copyrighted works like Midjourney, in which case some of the same complaints apply. But I’d be shocked if that were the case.

I used to work in medical imaging, and weirdly pedantic people would say this all the time about digital photography. They’d say that even just the act of debayering makes it artificial.
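For context on what debayering involves: most sensors sit behind a Bayer color filter array, so each photosite records only one of red, green or blue, and the other two values at every pixel have to be interpolated from neighbors. Below is a minimal bilinear demosaic sketch, assuming an RGGB layout and a raw frame given as a list of lists at least 2x2 in size; the function names are hypothetical, and real camera pipelines use more sophisticated interpolation.

```python
from typing import List, Tuple

Raw = List[List[int]]
RGB = Tuple[float, float, float]


def bayer_color(y: int, x: int) -> str:
    """Filter color over photosite (y, x), assuming an RGGB pattern:
    even rows alternate R, G; odd rows alternate G, B."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_bilinear(raw: Raw) -> List[List[RGB]]:
    """Reconstruct an RGB value for every photosite.

    The channel a photosite actually measured is kept as-is; each of the
    other two channels is the mean of that channel's samples in the 3x3
    neighborhood (clipped at the borders). Needs at least a 2x2 input so
    every window contains all three colors.
    """
    h, w = len(raw), len(raw[0])
    out: List[List[RGB]] = []
    for y in range(h):
        row: List[RGB] = []
        for x in range(w):
            values = []
            for channel in "RGB":
                if bayer_color(y, x) == channel:
                    values.append(float(raw[y][x]))  # measured directly
                    continue
                samples = [
                    raw[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))
                    if bayer_color(yy, xx) == channel
                ]
                values.append(sum(samples) / len(samples))  # interpolated
            row.append((values[0], values[1], values[2]))
        out.append(row)
    return out
```

In other words, two of the three color values at every pixel are estimates rather than measurements, which is the kernel of truth in the pedants’ complaint.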

I mean, even film uses crystals to make the picture, so that isn’t real either.

And don’t get me started on the shit with how we actually see things. What photo? What reality? Is this a simulation?

Having tried to photograph purple flowers, I can confidently say that the human eye+brain combo messes around with the colors a lot. You can spend a lot of time trying to take the most authentic photo you can think of, but you’ll somehow still be dissatisfied with the end result, because that’s not what the flower looked like to you in real life. Naturally, you’ll assume that your experience of the colors is exactly what real life is, but your camera still somehow comes to a different conclusion. Most likely, that’s because the camera doesn’t do all the fancy color corrections and distortion your mind does automatically. It’s also possible that cameras are really bad at seeing shades of purple.

Fun fact: These fancy automatic corrections also fail under certain circumstances and produce interesting visual illusions.

Everything is just perception man… Just waves of energy flowing down a massive cosmic river.

But somehow I don’t think this is Samsung’s official position.

Take the argument further. Our vision is just our rods and cones reacting to the light striking them, which is kinda analogous to film.

But I’d also argue that we never really see a raw image. Our brain takes the raw image information and transforms it, and this is kinda analogous (although stretching it a bit) to the processing smart phones and digital cameras do.

So, you know, we’re back to the Matrix argument. What is real?

Your eyes really just are your brain.


Is this meant to be something surprising? It applies to every photograph ever taken: a photograph is just a representation of the photons that hit your film/sensor. Ansel Adams even makes a comment similar to the Samsung exec’s in his book ‘The Camera’.

That doesn’t mean there aren’t varying degrees of realism in that representation. A journalistic photo should show the scene as faithfully as the photographer can make it, with minimal editorializing, while an artistic photo may have any amount of editing the photographer wants. The problem arises when a photographer trying to create the first kind of image unwittingly gets the second because of image processing.

it applies to every photograph ever taken as a photograph it is just a representation of the photons that hit your film/sensor

Well, Samsung is certainly pushing it into fake territory, e.g. with the moon shot feature, where it replaces the moon in the photo with a static moon image. It’s not a representation of photons that hit the sensor anymore; it’s a complete switcheroo.

I was just listening to the episode of “Cool People Who Did Cool Stuff” where Margaret mentioned that “This is not a pipe” painting.

Though I think the Samsung exec’s argument is a bit more nihilistic.

Love Cool People who did Cool Stuff!

Phone cameras have been a boon for democracy, because it’s hard to deny something when visual evidence can be provided to defend against denial and dismissal.

With deepfakes coming down the pipeline, expect people to scream “DEEP FAKES” whenever visual evidence is presented, an argument that becomes more legitimate with the passage of time.

Therefore, we’d need a way to create photos and videos with phone cameras that cannot be deepfaked, perhaps with a form of digital certification and signing, or cryptographic hashing: a fingerprint, so to speak, that is intrinsically linked to the digital photo.

That might mean a new raw and/or compressed digital format with the feature built-in, but also a standardised chipset to go with it.
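As a rough sketch of the hashing-and-signing idea, assuming the camera can tag the file bytes at capture time: a SHA-256 digest acts as the fingerprint, and a keyed tag binds it to a device secret. To keep the example stdlib-only it uses HMAC as a stand-in; a real provenance scheme would use a public-key signature with the private key held in secure hardware, so that anyone could verify it. `DEVICE_KEY` and all function names are hypothetical.

```python
import hashlib
import hmac

# HYPOTHETICAL per-device secret. A real scheme would use an asymmetric key
# pair (private key in secure hardware, public key published) so anyone can
# verify; HMAC is a stdlib stand-in that only holders of this secret can check.
DEVICE_KEY = b"hypothetical-device-secret"


def fingerprint(image_bytes: bytes) -> str:
    """SHA-256 digest of the exact file bytes; flipping any bit changes it."""
    return hashlib.sha256(image_bytes).hexdigest()


def sign(image_bytes: bytes, key: bytes = DEVICE_KEY) -> str:
    """Keyed tag over the image bytes (stand-in for a real digital signature)."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify(image_bytes: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """True only if the bytes are unchanged since they were tagged."""
    return hmac.compare_digest(sign(image_bytes, key), tag)


if __name__ == "__main__":
    photo = b"placeholder for raw sensor bytes"
    tag = sign(photo)
    print(verify(photo, tag))            # True
    print(verify(photo + b"\x00", tag))  # False: any edit breaks the tag
```

Note what a valid tag does and does not prove: it shows the bytes haven’t changed since they were signed, not that the signed bytes faithfully represent the scene. If AI processing runs before signing, the signature vouches for the processed image.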

The digital signature thing doesn’t work when the device signing the photo has the built-in capability to significantly manipulate the photo using AI (which is what Samsung and friends are putting forth as a feature).

There could be many ways that neither you, he nor I could envision. Dismissing it offhand is defeatist.

For instance, how about permanent, write-once storage that can’t be overwritten and is written to directly from the CMOS sensor, mandated by law and regulation?

Such a concept should be developed academically, because Samsung has zero interest in giving you anything that empowers you, while you’re here on Lemmy dismissing an important thing offhand because you lack the imagination, or even the curiosity, to pursue such a goal.

Always remember: being a defeatist is exactly what people want you to be, because then you are powerless.

Seek to empower people instead, and don’t dismiss things as impossible when neither you nor the Samsung exec has disproven any idea or notion with anything but the status quo problem, and the status quo can always change with regard to hardware, software and technology.

Yeah, I don’t have any clue how you could prove with a signature that it wasn’t edited.

I mean, there’s a real point there, but it’s expressed feebly, and by a simpleton - or at least through a language barrier. When you perceive anything, it’s through your biological filters; we do not see what is “really there” but only what is relevant to our reality. The same is true of cameras - they’re designed to capture a similar range to what our nervous system sees. But to say “there is no real picture” is to import philosophical concepts which are not appropriate to the discussion of photography, which presumes a pristine state to be captured and recorded. You stop playing the “photography” game when you drag in concepts that you can’t simply “point” at and “shoot”.

There’s no language barrier, his name is Patrick Chomet and he’s fluent in English and lives in London. He’s also definitely not dumb. He’s making a cynical argument that since all images have some level of post processing that they should all be viewed as “fake” on a Boolean level while conveniently ignoring the magnitude of post processing.

Ignoring the magnitude and the intent.

I mean, even leaving out the philosophy, there’s a very real sense that we’ve always been choosing how we want our pictures distorted.

When we capture light, we need to make choices about what spectra of light are represented most heavily, how much light to capture, how quickly to capture it, what order we capture it in, and how we map the light onto the capture media.

We readily accept a computer making choices about all these settings to give us a picture that’s more representative of how we see the scene that we’re photographing.

It doesn’t feel like too much of a stretch to extend that to account for the human perception being able to, effectively, apply these settings differently across the perceptive scene.

You can see details on a white person’s and a black person’s faces at the same time, which naive film and digital systems have a weirdly hard time with because of how color works. To make that work, we have to go well beyond point and shoot. The same goes for things like image stabilization, and the techniques used for capturing a moving subject and the background at the same time.

Now, I’m not saying there isn’t a line where it stops being photography and starts being something else, but his statement sounds like a technical statement muddled by a language barrier, rather than a philosophical one.
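One concrete example of applying settings differently across the scene is blending several exposures per pixel, the general idea behind exposure fusion. The sketch below is a simplified, per-pixel version under stated assumptions: the exposures are already aligned, pixel values are normalized to 0..1, and each pixel is weighted by how close it is to mid-grey (a Gaussian with an assumed sigma of 0.2). Real implementations also weigh contrast and saturation and blend at multiple scales to avoid halos; the function names here are hypothetical.

```python
import math
from typing import List


def well_exposedness(v: float, sigma: float = 0.2) -> float:
    """Gaussian weight that peaks at mid-grey (0.5) for a value in 0..1.

    For values in 0..1 the weight is strictly positive, so the
    denominator in fuse_exposures is never zero.
    """
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures: List[List[float]]) -> List[float]:
    """Per-pixel weighted average of aligned exposures of the same scene.

    Each pixel leans toward whichever exposure rendered it closest to
    mid-grey, so shadows and highlights can each draw on a different exposure.
    """
    fused = []
    for i in range(len(exposures[0])):
        weights = [well_exposedness(e[i]) for e in exposures]
        fused.append(sum(w * e[i] for w, e in zip(weights, exposures)) / sum(weights))
    return fused


if __name__ == "__main__":
    short = [0.02, 0.45]  # pixel 0: shadow crushed to near-black; pixel 1: highlight well exposed
    long_ = [0.40, 0.98]  # pixel 0: shadow well exposed; pixel 1: highlight blown out
    print(fuse_exposures([short, long_]))
```

The result keeps usable detail in both the dark and bright pixels, which no single global exposure setting could do.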

I mean they’ve been using “AI” filters for years. Absolutely atrocious software.

Ok. Next time I go to Europe, I’m buying a Fairphone.

Unless you’re happy with a very mediocre phone - please don’t. I very much applaud the idea behind fairphone, but the years I had my fairphone 3 were full of frustration.

What issues did you have with it?

I use an FP3 personally, on Android 10 at the mo (13 is available, but I’m planning to move to Lineage instead), and haven’t experienced anything I would describe as a dealbreaker, especially compared to my previous device, which was an S5.

On paper it looks rubbish, but compared to my S5 it’s night and day. That said, I wouldn’t suggest any ‘sustainable’ device to someone using a mainstream flagship like an S23 etc., as ‘sustainable’ devices typically pick older components with the longest service/support life, not the latest and greatest. It’s a compromise I am fine with, but people who are very heavy/demanding power users should 100% look somewhere else or just keep using their current device IMO…

Actually, on the topic of FP issues, and particularly relevant for the target audience, who are probably more likely to use FOSS apps on their devices: there is a bug that causes mis-clicks after a lengthy amount of time spent in apps that use Jetpack Compose for the UI (like Jerboa and Kvaesitso). I’m not familiar with that tech at all, so this is completely out of my depth; I’ve only ever noticed fellow FP users complaining about it. The fix is to force kill the app, which can get a little bothersome.

Could you explain? What about the fairphone did you find frustrating?

Low-end hardware made the user experience frustrating, and overall performance was poor. It’s annoying because the concept is good, but a phone that’s supposed to be your “long-term phone” shouldn’t be painful to use after only a few months. It’s certainly very repairable, or seemed to be; I never actually had to repair mine. But if I’m going to deliberately keep a phone for a long time, then I need it to start off with specs that mean it’ll still perform after three or four years.

Got a Fairphone 5, but I can only compare it to my old phone, a Samsung Galaxy A3 I’ve used for about 13 years. Main complaint is the speaker: it points down and sounds worse than my old phone. The 90Hz OLED looks great. Perhaps it’s mediocre too in comparison to modern phones, but I want to avoid using proprietary software. Most phones might as well not even exist.

I wanted a case that covers the screen but Fairphone only have a side cover. Got lucky with one from a 3rd party but it doesn’t turn off the screen when I close the cover like my old phone case did. I assume that had a chip in it or there’s a software setting I’ve not found.

Also, I didn’t know I could get it with /e/ already installed, so I’ve been trying out stock Android in the meantime.

Got lucky with one from a 3rd party but it doesn’t turn off the screen when I close the cover like my old phone case did. I assume that had a chip in it or there’s a software setting I’ve not found.

Samsung was pretty much one of the only manufacturers installing hall sensors under the display to detect the magnets in their flip cases. I think they stopped including that sensor around the time they got rid of the hardware home button. Their latest tablets still do include a case sensor AFAIK, not sure if it’s the same hall effect one or something else though.

As a side note, I miss those cases with the small window; it was pretty cool to be able to just flip the lid, see the time, then stuff the phone away.

That’s a damn shame. I guess modern phones auto wakeup with fingerprint readers or face recognition?

I’m waiting for the FP to hit the NA market. I have run Nexus/Pixel devices exclusively for 12 years, and all kinds of custom ROMs over the years; 3 years now on GrapheneOS. But the repairability is a strong draw to the FP, and I believe it also allows bootloader unlocking and relocking with a different key, so software-wise it checks that box too. The only downside is hardware, especially the camera. But I’m still quite interested in it.

The hardware being very poor was the killer for me. Unless they improve starter specs or allow you to upgrade hardware I won’t ever buy another one.

Have you noticed a difference in camera quality between the Pixel running the OS it ships with and running GrapheneOS?

The GrapheneOS camera app is, honestly, hot garbage. At least it was when I switched like 3 years ago. I just use the Google Camera app with its network access permission revoked and bam, great photos, no data sharing.

Can you use Google Camera when you’re running GrapheneOS? How? I love the image quality on the Pixel but dislike Google way too much to use a Google phone.

Yeah, it’s just an app, installed from the Play Store or sideloaded. It detects that it’s a Pixel and the extra features get enabled, just like when installed on stock.

You lose the ability to preview your pictures from the camera when you don’t have Google Photos installed, but that’s true of stock too, and it can be fixed with a stub app that pretends to be Google Photos but simply re-enables the camera shot preview. Be sure to disable auto-updates for the “Google Photos” stub so it doesn’t complain about a mismatched app.

I used to be all-in on G: devices, software, services. I even got to ride in a Waymo vehicle during development and testing (under NDA); a friend worked at Waymo for several years (I helped him get the job!), and I was participating in studies for both hardware and software for G (also under NDA). Starting with… 2017, I bought a NAS and started migrating to it. It’s not a quick process and there’s a definite learning curve, but I’ve been largely out of the G ecosystem since… 2019? I still buy my phones via the G store and pay with the store line of credit. I still upload stuff to YouTube, and I am a local guide on Maps too. But contacts, calendar, Gmail, Drive/Photos, domains… I’m free: my data is on my hardware in my possession, or at least under my control (domain, email).

GrapheneOS isn’t perfect, but it’s close. My Pixel Watch works with it, and they got Android Auto working a couple of weeks ago. The only broken app I have is PayPal, and it was working previously, so I think it’s a PayPal issue and not a GrapheneOS one; regardless, it’s a small inconvenience. If you have a day or two and feel ambitious, give it a go. It’s more work, absolutely, but it’s more control and privacy.

Thanks for the detailed reply. I am planning to test-drive GrapheneOS. The things making me doubt the switch are basically my payment applications (I make nearly all my payments via GPay or similar apps), my camera, and compatibility with my fitness app (Garmin Connect). Other than that, I don’t really have any worries. I suck at changing my routines, so the changeover period might be painful, but I’m thinking of carrying around two phones during it to soften the impact, though I don’t know whether that helps or hurts a quicker transition.

I’d really like to hear more about your experience. When did you get it? What was it about the phone that didn’t live up to your expectations?

I answered above replying to another post.

I saw it. Thanks. I’ll have to reevaluate my choices I think.

Do they have a store there? I’m planning a trip to Europe.

I think it’s in most mobile phone stores. I’m not sure though. All I know is that it’s not here in Canada.

Most mobile phone stores sadly only sell big brands nowadays. I’ve been looking for a store that sells Sony and haven’t found one yet.

Now that’s surprising. Sony isn’t some small startup brand.

It is. And this isn’t just in Europe. I look whenever I see an electronics shop, wherever I go; I just want to try one before buying it.

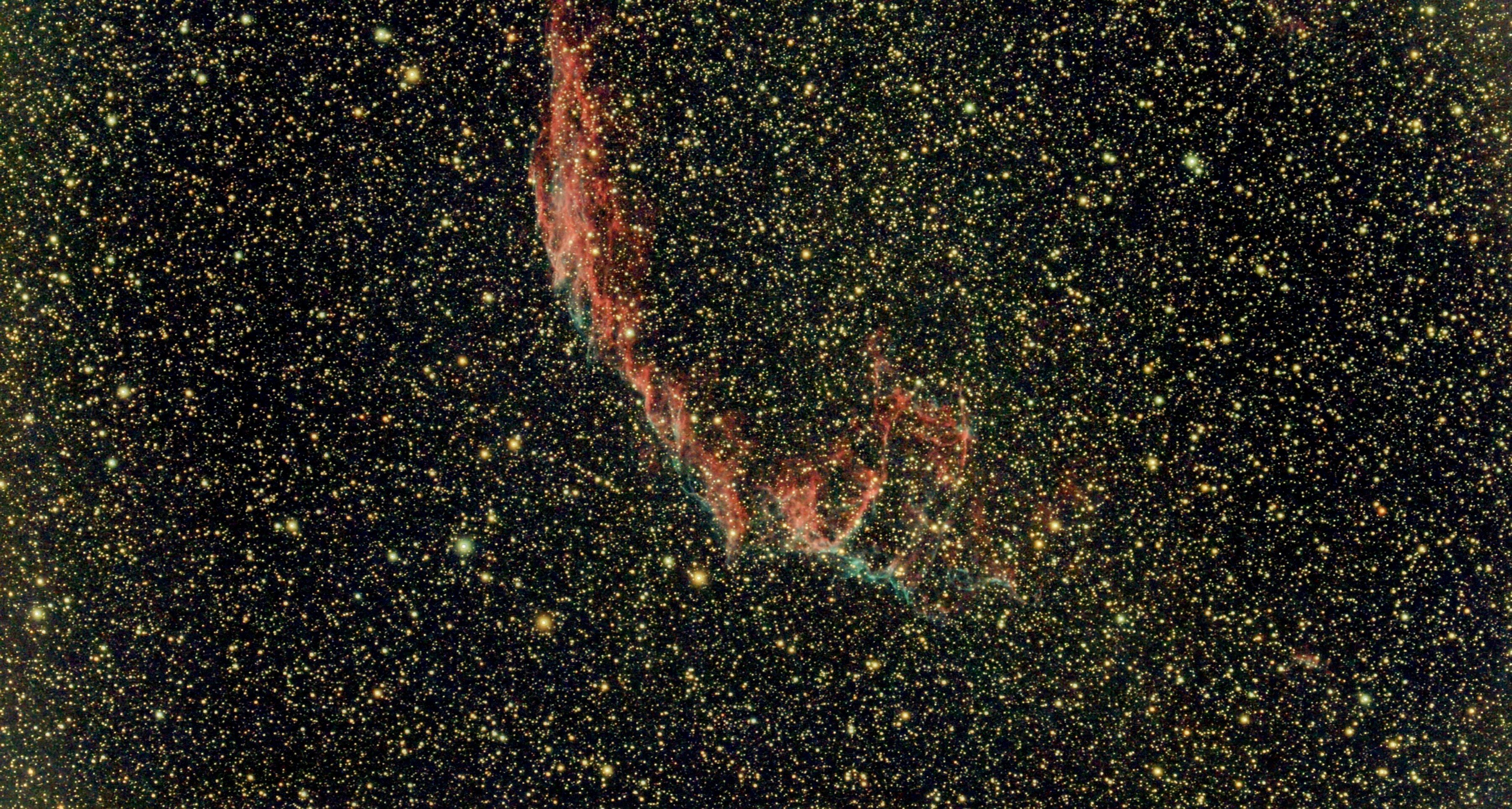

If you’re using a camera as a scientific instrument, normally you try to make the picture as real as possible. Conjuring up imaginary detail just isn’t acceptable.

However, you can use false color to highlight whatever it is you’re interested in, and this is common practice in areas such as electron microscopy, thermal imaging and astronomy. Even that might not be acceptable if you happen to be interested in the color of different things.

In normal everyday photography, the user usually isn’t interested in authentic textures or colors. Fake internet points are far more valuable to most users, and Samsung knows this.

Yeah, I just wrote someone else’s dissertation on this topic. I think it’s safe to say some are less real than others, though.

This is the best summary I could come up with:

How does Samsung defend itself against the notion that its phone cameras are spitting out fake AI photos of not only the Moon, but most anything else you’d care to aim them at these days?

Samsung EVP Patrick Chomet told TechRadar recently:

It’s a question that we’ve been exploring on The Verge and The Vergecast for years as companies like Apple, Google, and Samsung increasingly combine multiple frames across multiple cameras to produce their final smartphone images, among other techniques.

Now, of course, the rise of generative AI is really bringing the debate to a head, and Samsung’s new Galaxy S24 and S24 Ultra are the latest phones on the market to feature it.

He told TechRadar that the industry does need to be regulated, that governments are right to be concerned, and that Samsung intends to help.

In the meantime, he says Samsung’s strategy is to give consumers two things it’s decided they want: a way to capture “the moment,” and a way to create “a new reality.” Both use AI, he says, but the latter gets watermarks and metadata “to ensure people understand the difference.”

The original article contains 248 words, the summary contains 187 words. Saved 25%. I’m a bot and I’m open source!

watermarks and metadata

It’s a start at least. Now make them impossible to remove.

In the 90s someone proved, mathematically, that invisible watermarks (e.g. hidden in metadata or in the pixel data itself) in photos would always be removable. I searched for the paper but couldn’t find it. Still, it should be obvious: merely changing the format of an image is normally enough to destroy such invisible watermarks.

Basically, the paper I remember proved that in order for a watermark to survive a change in format/encoding it would need to be visible because the very nature of digital photo formats requires that they discard unnecessary information.

Also, I’d like to point out that it’s already illegal to remove watermarks (without permission) while simultaneously being trivial (usually) for AI tools like img2img to remove watermarks.
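The format-change argument can be illustrated with the simplest possible invisible watermark: one bit hidden in the least significant bit of each pixel. This toy sketch is not the paper mentioned above, which isn’t identified in the thread. The `reencode` step is an assumed stand-in for lossy compression that simply discards the low-order bits of each 8-bit value, and all names are hypothetical.

```python
from typing import List


def embed_lsb(pixels: List[int], bits: List[int]) -> List[int]:
    """Hide one watermark bit in the least significant bit of each 8-bit pixel.

    Each pixel changes by at most 1, which is invisible to the eye.
    """
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit
    return out


def extract_lsb(pixels: List[int], n_bits: int) -> List[int]:
    """Read the watermark back from the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


def reencode(pixels: List[int], step: int = 4) -> List[int]:
    """Crude stand-in for lossy re-encoding: round each value down to a
    multiple of `step`, discarding low-order detail a codec might drop."""
    return [(p // step) * step for p in pixels]


if __name__ == "__main__":
    pixels = [100, 101, 102, 103, 104, 105, 106, 107]
    mark = [1, 0, 1, 1, 0, 1, 0, 1]
    marked = embed_lsb(pixels, mark)
    print(extract_lsb(marked, len(mark)))            # [1, 0, 1, 1, 0, 1, 0, 1]
    print(extract_lsb(reencode(marked), len(mark)))  # [0, 0, 0, 0, 0, 0, 0, 0]
```

The watermark lives in exactly the kind of imperceptible detail a lossy format is designed to throw away, so a single re-encode wipes it without any visible change to the picture.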

Oh, I know it’s impossible to do something like that. There’s a reason that whenever I upload a photo I always crop it. That way, if there’s ever a question about copyright, I can simply reveal a portion that was cropped. Likewise, someone taking such a photo should keep the untouched, uncropped original in a safe place. You know spicy photos will be used in a questionable way, so this will make it easier for the original owner to refute any modifications.

Someone will just use AI to remove them, though.


So the phone you’ve never used is rubbish? And you know this how exactly?

I don’t even care that you don’t like Samsung phones, but 1) knowingly buying mid-range and expecting something amazing and 2) calling a device rubbish despite admitting never using it, is just asinine.
