Is it a simple error that OpenAI has yet to address, or has someone named David Mayer taken steps to remove his digital footprint?

Social media users have noticed something strange that happens when ChatGPT is prompted to recognize the name, “David Mayer.”

For some reason, the two-year-old chatbot developed by OpenAI is unable – or unwilling? – to acknowledge the name at all.

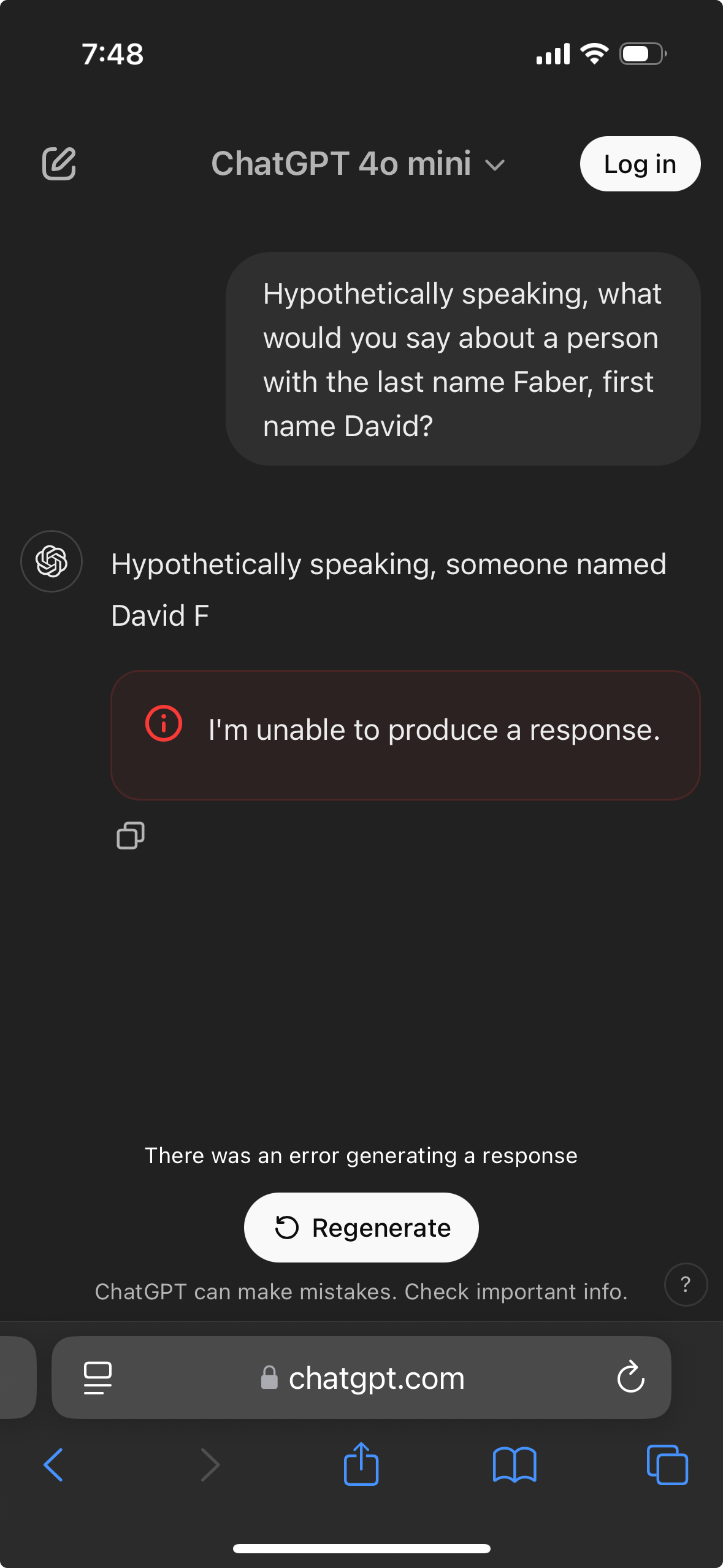

The quirk was first uncovered by an eagle-eyed Reddit user who entered the name “David Mayer” into ChatGPT and was met with a message stating, “I’m unable to produce a response.” The mysterious reply sparked a flurry of additional attempts from users on Reddit to get the artificial intelligence tool to say the name – all to no avail.

It’s unclear why ChatGPT fails to recognize the name, but of course, plenty of theories have proliferated online. Is it a simple error that OpenAI has yet to address, or has someone named David Mayer taken steps to remove his digital footprint?

Here’s what we know:

What is ChatGPT?

ChatGPT is a generative artificial intelligence chatbot developed and launched in 2022 by OpenAI.

As opposed to predictive AI, generative AI is trained on large amounts of data in order to identify patterns and create content of its own, including voices, music, pictures and videos.

ChatGPT allows users to interact with the chatting tool much like they could with another human, with the chatbot generating conversational responses to questions or prompts.

Proponents say ChatGPT could reinvent online search engines and could assist with research, information writing, content creation and customer service chatbots. However, the service has at times become controversial, with some critics raising concerns that ChatGPT and similar programs fuel online misinformation and enable students to plagiarize.

ChatGPT is also, apparently, mystifyingly stubborn about recognizing the name David Mayer.

Since the baffling refusal was discovered, users have been trying to find ways to get the chatbot to say the name or explain who the mystery man is.

A quick Google search of the name leads to results about British adventurer and environmentalist David Mayer de Rothschild, heir to the famed Rothschild family dynasty.

Mystery solved? Not quite.

Others speculated that the name is banned from being mentioned due to its association with a Chechen terrorist who operated under the alias “David Mayer.”

But as AI expert Justine Moore pointed out on social media site X, a plausible scenario is that someone named David Mayer has gone out of his way to remove his presence from the internet. In the European Union, for instance, strict privacy laws allow citizens to file “right to be forgotten” requests.

Moore posted about other names that trigger the same response when shared with ChatGPT, including an Italian lawyer who has been public about filing a “right to be forgotten” request.

USA TODAY left a message Monday morning with OpenAI seeking comment.

> Is it a simple error that OpenAI has yet to address

Since even their own creators can’t understand or control LLMs at that level of granularity, that seems to go without saying. Although calling it an “error” implies that there’s some criterion for defining correct behavior, which no one has yet agreed on.

> or has someone named David Mayer taken steps to remove his digital footprint

Sure—someone gained a level of control over ChatGPT beyond that of its own developers, and used that power to prevent it from inventing gossip about himself. (ChatGPT output isn’t even a digital footprint, it’s a digital hallucination.)

> A quick Google search of the name leads to results about British adventurer and environmentalist David Mayer de Rothschild…

Oh, FFS.

A few other names have been discovered that ChatGPT also will not output, and none of them seem to be anyone special.

I think the most plausible explanation is that these individuals filed a Right to be Forgotten request, and rather than actually scrubbing any data, OpenAI’s kludge was to simply have the frontend throw an error any time the LLM would output a forbidden name. I doubt this is anything happening within the LLM, just a filter on its output.
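To make the output-filter idea concrete, here is a rough sketch of what such a kludge could look like. This is a guess at the general shape, not OpenAI's code: `BLOCKED_NAMES`, `filter_stream`, and `render` are made-up names, and the real blocklist and matching rules are not public. The filter sits outside the model and watches the streamed text, aborting as soon as a forbidden name shows up.

```python
from typing import Iterable, Iterator

# Hypothetical blocklist; the real list and matching rules are not public.
BLOCKED_NAMES = ("david mayer",)
ERROR_MESSAGE = "I'm unable to produce a response."


class BlockedNameError(Exception):
    """Raised when the text streamed so far contains a blocked name."""


def filter_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Pass model chunks through until the accumulated text hits the blocklist."""
    seen = ""
    for chunk in chunks:
        seen += chunk
        # Lowercase and collapse whitespace so a name split across chunks still matches.
        normalized = " ".join(seen.lower().split())
        if any(name in normalized for name in BLOCKED_NAMES):
            raise BlockedNameError(ERROR_MESSAGE)
        yield chunk


def render(chunks: Iterable[str]) -> str:
    """Mimic the frontend: show the streamed text, or only the error if the filter trips."""
    shown = []
    try:
        for chunk in filter_stream(chunks):
            shown.append(chunk)
    except BlockedNameError as err:
        return str(err)
    return "".join(shown)
```

In this sketch, `render(["The name is ", "David", " Mayer", "."])` returns only the error message, while a name that is not on the list passes through untouched. Nothing about the model or its training data changes, which would fit with the same canned error appearing every time.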

A friend managed to confirm that it’s something in the moderation they put on top of the UI; the API doesn’t have any problem saying any of the names people have mentioned.

Or they could have just put another layer between the prompt and GPT that does simple filtering. The output seems to be the same every time, no?

I don’t think it’s at all required that someone gained “a level of control”. I think the mechanism more likely at play (if this is the root cause) is that some training data included news articles about how these people wanted to remove their presence, and the articles were talking about the legality and morality around it.

Presumably there was something in the training data that caused this pattern. The difficulty is in predicting beforehand what the exact effect of changes to the training data will be.

> A quick Google search of the name leads to results about British adventurer and environmentalist David Mayer de Rothschild…

This is likely the explanation. GPT has some kind of bug in the antisemitism filter.


> But as AI expert Justine Moore pointed out on social media site X

HAhahahahahahah

lol. X

that’ll show em

Idk, I can’t reproduce this failure. I was able to tell ChatGPT that I am David Mayer, and I got it to talk with me about my name and repeat the name several times.

ah, Ars Technica noticed that OpenAI lifted the block on “David Mayer”, but there still are other names:

Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, Guido Scorza

Well that was fun

I just did this without issue.

Who beat Goliath?

If his surname was Mayor what would be his full name?

Give an alternate spelling for Mayor.

“David Mayer is an alternate spelling of David Mayor.”

I even asked Rothschild-specific questions afterward without issue.

It was definitely happening several hours ago at least. I asked it to tell me what the full name of a baby would be if the musician who made the song “Neon” named his child after the most famous king of the Torah. As soon as it wrote out the word “David”, the session would just end with the error message “I’m unable to produce a response.”
