You can look into Cloudflare’s CSAM setting, but I’m not exactly sure what it does.

I don’t understand how a web host is legally responsible for what their users post as long as there’s active moderation removing it in a timely manner.

You are correct, there are safe harbor provisions on the matter. As an admin, though, you have a legal responsibility to report the content and store it securely once it has been reported to you.

It’s like it’s not enough that you deal with all the technical shit, updating to new versions, checking shit out from GitHub, running builds, paying for the goddamn thing, then you are also responsible for babysitting content? Fuck that. Unless you have a good group of mods/admins it is really difficult to do.

That’s why you either sell your users to the advertisers or charge a monthly subscription. Free internet doesn’t work.

The reality of hosting reddit 2.0 is finally starting to sink in.

I can do all of the above, except for police content.

And Reddit of course had unpaid mods to do that.

So like I say, it can be done, you just need the right team of mods/admins for your own server.

Cool, you and your 5 buddies have a great time. Some of us would like to see a viable alternative to Reddit that respects privacy and doesn't crash every other day.

The Fediverse is going to be known as a kiddie porn haven, given the level of professionalism and maturity on display at the major servers.

I have yet to see a single problem with Lemmy over months of daily use. An instance may have crashed in that time, but I didn't notice content from any instance going missing while scrolling, and I don't seek out particular communities. It helps that I'm on a less popular instance, and the lemmy.ca admins seem to run a tight ship.

I block a couple of communities a day, but that seems to be expected. I also haven’t seen any kiddie porn.

Less discussion than Reddit, and fewer niche communities, but that's been easy to forgive because, well, fuck Reddit.

If an alternative pops up at some point, I’ll be sure to give it a try. Lemmy is doing just fine for me.

Keep in mind that this does not apply to every country in the world.

FYI, in the USA, CDA Section 230 preempts state law but does not override federal criminal law. If something federally illegal lands on your server, you need to deal with it ASAP.

I would love to have the EFF chime in, but there are some protections for you as a host under the Online Copyright Infringement Liability Limitation Act (OCILLA), the safe harbor provision in the USA.

As to how that has been tested legally on federated content, I don’t know. Perhaps another elder of the internet can tell me how Usenet servers handle it.

You are right, there are safe harbor protections here. It's a legal mess that must be navigated carefully. We will see how things progress.

While correct, you may still end up having to deal with the law over it. The whole "you can beat the rap, but you can't beat the ride" thing. It could be a ton of hassle and legal fees.

What are you implying here? That @gabe should never have bothered with running a server? What about the server you are connected to right now? Should they shut down because of what may travel across it?

No.

They’re protected under the same rules as somebody running a WiFi hotspot at a coffee shop. As long as they are doing everything within reason to be a good steward of their local network (which is what Gabe is doing) then they are protected.

Doesn’t seem like he was implying anything. Just stating the fact that part of the burden of citizenship is sometimes having to interact with law enforcement, maybe even go to trial, even if you’ve done nothing wrong.

Pretty eloquent way of saying what I was trying to express. Thanks

I’m not suggesting anyone should or shouldn’t do anything, nor that I’m not grateful for people that do. Just saying it’s a potential downside that people should seriously consider before hosting any public access systems.

> They're protected under the same rules as somebody running a WiFi hotspot at a coffee shop. As long as they are doing everything within reason to be a good steward of their local network (which is what Gabe is doing) then they are protected

Hopefully, yeah. But again, there's still the potential for the coffee shop to have all its equipment seized and to have to deal with a law enforcement investigation, maybe even the courts. Even if the risk of actual jail time and monetary penalties is low, it's something people should consider before doing it.

This is one of the reasons I'm not running a public access network or Tor exit node at home, even though I think those are worthwhile things to do.

FYI, not all jurisdictions treat website hosting (storage and distribution) as equivalent to hotspot/internet service (a dumb relay).

LW is not hosted in the USA.

This is the reason that vlemmy.net shut down.

Why are people like this?

Because they can.

And it's easy. Society spends so much time and effort making life easier via improvements like simple image uploading and sharing, so of course some piece of shit will use it for this. Just a few clicks and they've created headaches for thousands of people. It requires no ability, so the barrier to entry is as low as being the kind of trash that likes that stuff.

And because of trauma, I assume (though I could be wrong).

Here I thought I could create a server and use it as an instance only to hold my profile, which I could then use to interact across the fediverse.

You can absolutely do that; just make the profile registration private.

But the federation issue with CSAM… I don’t want those issues.

Yes, but people can still browse content from your instance without logging in. There is nothing stopping people from viewing illegal material through your instance.

Section 230 makes this a non-issue. It would be like suing the phone company, especially if you don't moderate. If you moderate, then it can be said that what is left had your endorsement. If you don't moderate, then you are simply a victim of vandalism.

Section 230 is only applicable to the US.

Free countries have an equivalent, or else free online discussion space would be impossible. The section 230 compromise is inevitable or the internet would perish.

Ok, I think I might be misunderstanding the issue; so it’s more of bad actors rather than a copy of images in cache?

Is there some guide to it? I was thinking the same!

Yeah, I have wanted that from day one. I want it to work like email: my identity on my domain that I can bring anywhere, storing my comments, posts, and subscriptions, and that's it, maybe direct messages or explicitly saved posts. Not every damn post that I read or subscribe to.

I don’t know what’s going on and I’m afraid of clicking the link…

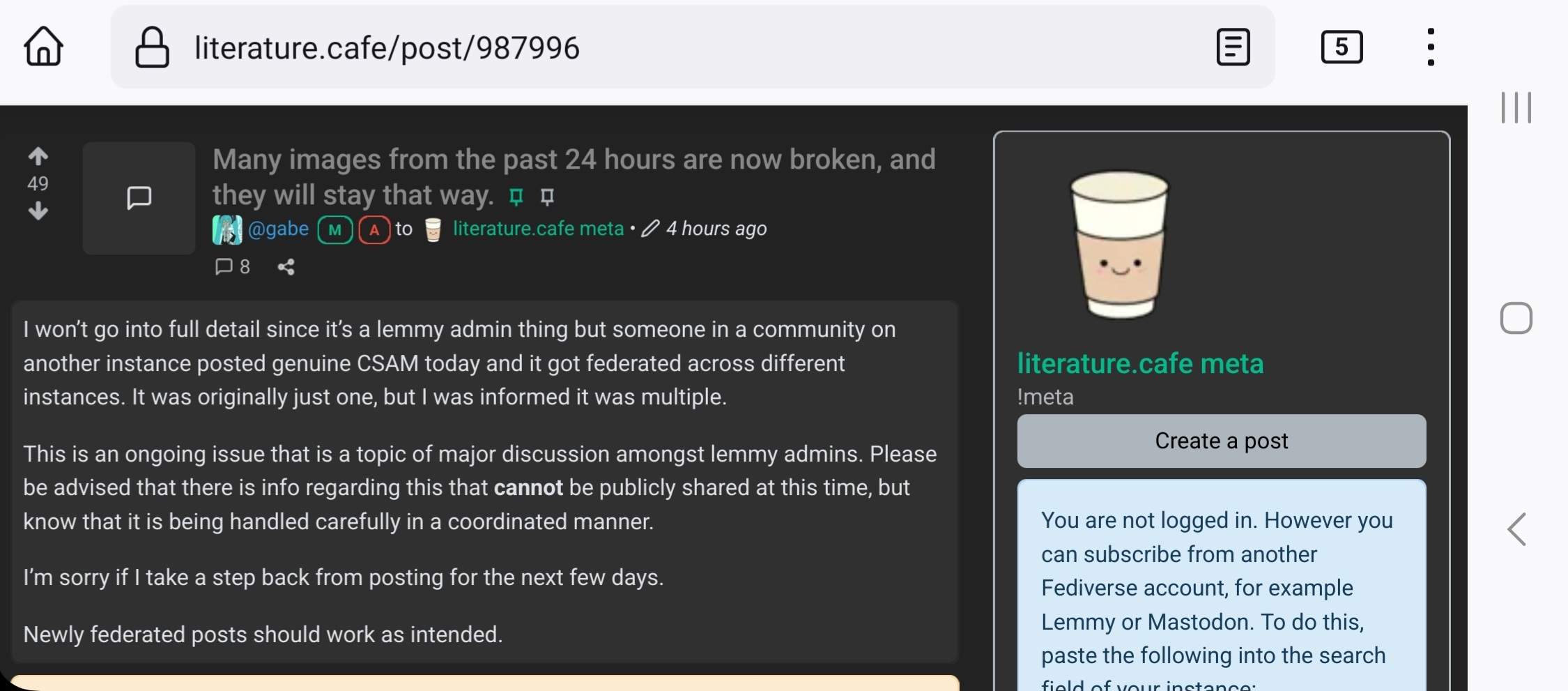

It's an admin post talking about why some images are broken.

Can I ask what the acronym means?

I’m too paranoid to do an internet search to figure it out, but I have a hunch.

I didn’t know either but I took one for the team, regrettably. Child Sexual Abuse Material.

Damn. I guessed it was something like that.

That’s so disgusting, some fuckers are sick.

Thanks for replying!

It’s horrific. :(

When did the term change from CP to CSAM? Seems like everyone changed overnight.

IIRC, the reason it changed was because it's a more accurate description of what the material contains, and because actual pornography is supposed to be consensual by nature, even when it's depicting an imitation of a non-consensual act. However, kids are incapable of consenting. Calling videos of them porn feels too much like putting them on the same level as adult porn, when they're not at all the same. If someone released a video of someone being raped, I think most people would consider it distasteful to call a real rape video "pornography".

I can understand that reasoning tbh.

Makes sense, and it's also a more unique acronym.

Thanks, I also looked it up and got mostly links about the Belgian government login.

That’s because “C-SAM” sounds like “sesame” in French.

This kind of issue is why pawb.social is not open registration. These low-effort trolls cause a lot of problems, and I don't want my server to be responsible for this crap.

Doesn't matter, you are still affected by this issue, since any images from communities that you or anyone else on your instance follows get cached on your server, whether or not you actually looked at them. I would advise you to look into this if you are the instance admin and didn't know this by now.

Correct! I should have specified I mean to prevent our community from being the source of the posts.

Maybe Mozilla could help. I know they're trying to help make the net less of a toxic place, and this is a serious issue.

Wait are images federated too? Can’t u disable it?

I think only links to the images are federated.

EDIT: I am from the programming.dev instance, and this post links to https://lemmy.ca/pictrs/image/22411ac1-3f76-4904-9f0e-8522311c4ee1.jpeg which seems to come from lemmy.ca and not lemmy.ml where this community is originally from.

Maybe it was cross posted? Not sure.

The thumbnails are hosted on your home instance.

And the content itself? Is it hosted on the uploader's home instance, or on every home instance and then served to their own users from there?

It's just hosted on their home instance. If all the content were synced, it would be impossible to set up smaller, personal instances without massive storage capacity.

I see, thanks for the explanation.

Don't have open signups.

Bruh I saw that shit. It was fucked up

Please clear your browser cache and take care of yourself in whatever you need to. I am so sorry you had to see it.

Thanks. Can’t unsee it. It was in my app (Memmy), but I should probably clear that cache.

On Android, you can long-press an app icon (from the launcher or app switcher) to get to the app properties, then look for storage and wipe the cache.

Wouldn’t clearing it immediately rather than reporting it to the police be ill advised?

My guess is that someone noticed that Lemmy doesn't yet have moderation tools as robust as Mastodon's and decided they'd federate "NoNoNo"[1] images all over the place just to be a troll.

[1] CSAM, CP: very illegal and naughty images of kids.

Just saw some incest rape fetish shit not 10 minutes ago too. People are fucked in the head.

On memmy rn and the spoiler works fine

“Memmy can’t render this spoiler” is something added by Memmy when it errors. OP wrote something else.

Actual Comment:

Oh LMAO I can’t believe I thought otherwise. Thx for letting me know.

No problem! I thought the same until I started wondering why everyone was saying that.

I thought about hosting my own instance, but now I’m definitely not…

I thought the worst that could happen was being ignored… apparently not.

You could host your own instance and just not let new users sign up, by keeping registration private.

But if you federate with an instance that gets the material, then you will also get it.

This is what I’m thinking of doing. Lets me host my own content and not rely on other instances to stay alive.

What exactly does CSAM mean? (I understand the point of the meme completely; I've just never heard of that abbreviation.)

It’s child porn, and as horrifying as you’ve been told it is. Some scumbag trolls were posting it on lemmy.world’s memes sub and so .world finally decided to close open signups.

When did we switch to that instead of CP?

a) “CP” is a very online phrase that I imagine hasn’t permeated popular culture for good reason

b) calling it “pornography” is tacitly implying it is arousing and/or serves a purpose, by calling it “abuse material” you remove any positive connotations

I think being more specific is also a good thing. Two-letter acronyms are too broad. As CSAM, it's unambiguous what it refers to. But CP means many things. E.g., in software dev, it's often used for "control plane". Some video games (e.g., Pokemon Go) use it for "combat power". I think ESO used it for "champion points" (though it might have been a different MMO).

Ah, makes sense now.

Porn is a legal thing that normal people enjoy. The term CSAM takes a stance that it is always abuse. I think they are basically interchangeable but CSAM is the currently preferred term.

But it is always abuse regardless of the term, so a new term is wildly unnecessary

Sometimes, terms need changing to separate it from something else. Porn in itself is legal and fine. When adding children in the mix it’s easy to get caught up in the porn part of the discussion rather than the child part.

Separating the terms puts the focus more on the child abuse part.

I've literally not once seen anyone "get caught up in the porn part". What?

Have you been paying attention to the debate at all?

Many bills in the US have been proposed to mitigate child porn by just targeting porn in general.

Why are you opposed to using terms that are accurate?

Bullshit, child porn is “cp”. CSAM means Child Sexual Abuse Material

That's absurd. People aren't stupid. We're capable of understanding context, and playing semantic games with something so serious is quite honestly pretty offensive.

What would you say is the difference? I feel like the terms are interchangeable. The comment you replied to didn’t give the exact abbreviation but it gave the essence of what is meant by the picture.

By OC trying to imply a difference, one could be led to assume that OC believes there is some part of the illegal material that they do not consider abuse.

I vehemently disagree with that line of thinking. It is abuse, and that is why it is illegal.

Who in their right mind thinks calling it CP is validating it in any way? Just because some morons decided to make a politically correct term for it doesn't change what it is.

The politically correct term CSAM is to differentiate it from ordinary porn which is legal and at least somewhat socially acceptable and ensure that people understand that when children are involved, it is always abusive. The terms mean the same thing, but being precise with language is important.

People already understand that. 🤦

“Child sexual abuse material”

Ohh, I thought the guy just misspelled scam :b

And fuck all of the lemmy users who demand that instance owners take on the risk that the users demand.

No, unpaid volunteers do not want jail time for the child porn you wish to post.