Pretty sure Valve has already realized the correct way to be a tech monopoly is to provide a good user experience.

Personally, I think Microsoft open sourcing .NET was the first clue open source won.

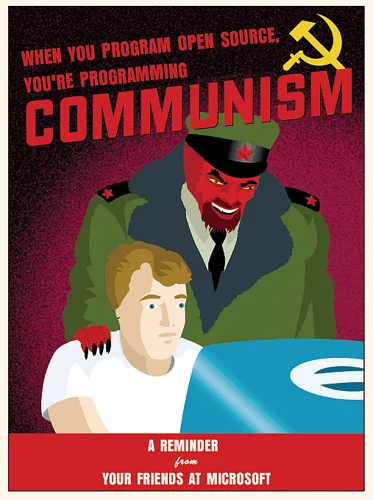

Time to dust off this old chestnut

I remember this being some sort of Apple meme at some point. Hence the gumdrop iMac.

Deepseek is not open source.

The model weights and research paper are, which is the accepted terminology nowadays.

It would be nice to have the training corpus and RLHF too.

The training corpus of these large models seems to be “the internet, YOLO”. It’s fine for them to download every book and paper under the sun, but if a normal person does it…

Believe it or not:

A lot of other AI models can say the same, though. Facebook’s is. Xitter’s is. Still wouldn’t trust those at all, or any other model that publishes no reproducible code.

I wouldn’t call it the accepted terminology at all. Just because some rich assholes try to will it into existence doesn’t mean we have to accept it.

But then, people would realize that you got copyrighted material and stuff from pirating websites…

Well, if they really are and the methodology can be replicated, we are surely about to see a crazy amount of DeepSeek competition. Imagine how many US companies in the AI and finance sectors are in possession of even more chips than the Chinese claimed to have trained their model on.

Although the question arises: if the methodology is so novel, why would these folks make it open source? Why would they share the results of years of their work with the public and lose their edge over the competition? I don’t understand.

Can somebody who actually knows how to read a machine learning codebase tell us something about DeepSeek after reading their code?

Hugging Face already reproduced DeepSeek R1 (called Open R1) and open sourced the entire pipeline.

Did they? According to their repo, it’s still WIP: https://github.com/huggingface/open-r1

They are trying to make it accepted, but it’s still contested. Unless the training data is provided, it’s not really open.

the accepted terminology

No, it isn’t. The OSI specifically requires that the training data be available, or at the very least that the source of the data and any fee for it be given, so that a user could get the same copy themselves. Because that’s the purpose of something being “open source”. Open source doesn’t just mean free to download and use.

https://opensource.org/ai/open-source-ai-definition

Data Information: Sufficiently detailed information about the data used to train the system so that a skilled person can build a substantially equivalent system. Data Information shall be made available under OSI-approved terms.

In particular, this must include: (1) the complete description of all data used for training, including (if used) of unshareable data, disclosing the provenance of the data, its scope and characteristics, how the data was obtained and selected, the labeling procedures, and data processing and filtering methodologies; (2) a listing of all publicly available training data and where to obtain it; and (3) a listing of all training data obtainable from third parties and where to obtain it, including for fee.

As per their paper, DeepSeek R1 required a very specific training dataset, because when they tried the same technique with less curated data, they got “R1-Zero”, which basically ran fast and spat out a gibberish salad of English, Chinese and Python.

People are calling DeepSeek open source purely because they called themselves open source, but they seem to just be another free-to-download, black-box model. The best comparison is Meta’s Llama, which, weirdly, nobody has decided is going to upend the tech industry.

In reality, “open source” is a terrible term and a very loose fit for what people are basically trying to say: that anyone could recreate or modify the model because they have the exact ‘recipe’.

the accepted terminology nowadays

Let’s just redefine existing concepts to mean things that are more palatable to corporate control, why don’t we?

If you don’t have the ability to build it yourself, it’s not open source. Deepseek is “freeware” at best. And that’s to say nothing of what the data is, where it comes from, and the legal ramifications of using it.

The term open source is not free to redefine, nor has it been redefined.

DeepSeek shook the AI world because it’s cheaper, not because it’s open source.

And it’s not really open source either. Sure, the weights are open, but the training materials aren’t. Good luck looking at the weights and figuring things out.

True, but they also released a paper that detailed their training methods. Is the paper sufficiently detailed such that others could reproduce those methods? Beats me.

Wall Street’s panic over DeepSeek is peak clown logic—like watching a room full of goldfish debate quantum physics. Closed ecosystems crumble because they’re built on the delusion that scarcity breeds value, while open source turns scarcity into oxygen. Every dollar spent hoarding GPUs for proprietary models is a dollar wasted on reinventing wheels that the community already gave away for free.

The Docker parallel is obvious to anyone who remembers when virtualization stopped being a luxury and became a utility. DeepSeek didn’t “disrupt” anything—it just reminded us that innovation isn’t about who owns the biggest sandbox, but who lets kids build castles without charging admission.

Governments and corporations keep playing chess with AI like it’s a Cold War relic, but the board’s already on fire. Open source isn’t a strategy—it’s gravity. You don’t negotiate with gravity. You adapt or splat.

Cheap reasoning models won’t kill demand for compute. They’ll turn AI into plumbing. And when’s the last time you heard someone argue over who owns the best pipe?

Apparently DeepSeek is lying: they were collecting thousands of NVIDIA chips in violation of the US embargo, and it’s not about the algorithm. The model’s good results come just from sheer chip volume and energy used. That’s the story I’ve heard, and honestly it sounds legit.

Not sure if this question has been answered, though: if it’s open sourced, can’t we see what algorithms they used to train it? If we could, then we would know the answer. I assume we can’t, but if we can’t, then what’s so cool about it being open source? What parts of the code are valuable besides the algorithms?

The open paper they published details the algorithms and techniques used to train it, and it’s been replicated by researchers already.

So are these techniques that novel and groundbreaking? Will we now see a burst of DeepSeek-like models everywhere? Because that’s absolutely what should happen if the whole story is true. I would assume there are dozens or even hundreds of companies in the USA that possess a similar, and surely larger, number of chips than the Chinese team claimed to have trained their model on, especially in the finance sector and among companies focused purely on AI research.


Sauce?

It’s open sauce.

internet

Elaborate? Link? Please tell me this is not just an “allegedly”.

extra time which I’m not sure I want to spend

It’s your burden of proof, bud.

https://www.youtube.com/watch?v=RSr_vwZGF2k This is what I watched, and I base my opinion on it. I’m not saying it’s true. It just sounded legit enough and I didn’t have time to research more. I will gladly follow any links that lead me to content that destroys this guy’s arguments.

This is after all, a court of law.

Cope be strong in this one lol

It’s time for you to do some serious self-reflection about the inherent biases you believe about ~~Asians~~ Chinese people.

WTF dude. You mentioned Asia. I love Asians. Asia is vast. There are many countries, not just China, bro. I think you need to do these reflections. I’m talking about a very specific case of Chinese DeepSeek devs potentially lying about the chips. The assumptions and generalizations you are thinking of are crazy.

And how do your feelings stand up to the fact that independent researchers find the paper to be reproducible?

Well, maybe. Apparently some folks are already doing that, but it’s not done yet. Let’s wait for the results. If everything is legit, we should have not one but plenty of similar and better models in the near future. If the Chinese team did this with 100 chips, imagine what can be done with the 100,000 chips that NVIDIA can sell to a US company.